Matrices and tensors - named, visualized

- Piotr Migdał

- founder at Quantum Flytrap / AI researcher at ECC Games

- p.migdal.pl, @pmigdal, github.com/stared

- PiterPy Online, 3-6 Aug 2020

Hi! / Привет!

What is the matrix?

“The Matrix is everywhere. It's all around us,” Morpheus explains to Neo.

In plain English? Well, it is a table of numbers.

Outline

(warning: spoilers)

- Pure Python -> NumPy -> Pandas

- Some cool visualizations

- Descent into deep learning

- A pinch of quantum

- Applause (or awkward silence)


> One (of many) amusing socially awkward remote conference call interactions is when the speaker makes a joke. Everyone is mutated so it seems like it awkwardly falls flat, and noone wants to unmute just to say "haha". Calls still need many more features to bridge the real life gap
>
> (Andrej Karpathy, @karpathy, May 15, 2020)

Pure Python

Does Python have support for matrices?

```python
lol = [[1., 3., -1., 0.5, 2., 0.],
       [4., 0., 2.5, -4., 2., 1.],
       [1., 1., -1., 2, 2., 0.]]
```

lol stands for a list of lists. Nothing to laugh about.

```python
# entry
lol[1][3]
```

```
-4.0
```

```python
# row
lol[2]
```

```
[1.0, 1.0, -1.0, 2, 2.0, 0.0]
```

```python
# column
[row[2] for row in lol]
```

```
[-1.0, 2.5, -1.0]
```

So

- You can use it

- But you shouldn't

Because

- It's slow
- There is no easy way to do typical operations (not even element-wise addition)
- There are no checks on numeric types
- Or even on whether it is a table at all
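Here is a quick illustration of these pitfalls, reusing the `lol` list from above. On lists, `+` concatenates rather than adds, and `-` is not defined at all. Element-wise arithmetic has to be written by hand, and nothing checks that rows have equal length or even contain numbers. The `ragged` list is a made-up example.

```python
lol = [[1., 3., -1., 0.5, 2., 0.],
       [4., 0., 2.5, -4., 2., 1.],
       [1., 1., -1., 2, 2., 0.]]

# `+` on lists concatenates: 6 + 6 = 12 entries, not an element-wise sum
concatenated = lol[0] + lol[2]
print(len(concatenated))  # 12

# `-` is not defined for lists at all
try:
    lol[2] - lol[0]
except TypeError as err:
    print("TypeError:", err)

# an element-wise difference has to be spelled out by hand
diff = [b - a for a, b in zip(lol[0], lol[2])]
print(diff)  # [0.0, -2.0, 0.0, 1.5, 0.0, 0.0]

# nothing stops a "matrix" from being ragged or mixing types (made-up example)
ragged = [[1., 2., 3.], [4., 5.], ["six"]]
print([len(row) for row in ragged])  # [3, 2, 1]
```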

NumPy

Or: the numerical backbone of Python.

```python
import numpy as np

arr = np.array(lol)
arr
```

```
array([[ 1. ,  3. , -1. ,  0.5,  2. ,  0. ],
       [ 4. ,  0. ,  2.5, -4. ,  2. ,  1. ],
       [ 1. ,  1. , -1. ,  2. ,  2. ,  0. ]])
```

```python
# entry
arr[1, 3]
```

```
-4.0
```

```python
# row
arr[1]
```

```
array([ 4. ,  0. ,  2.5, -4. ,  2. ,  1. ])
```

```python
# column
arr[:, 1]
```

```
array([3., 0., 1.])
```

```python
# operations
arr[2] - arr[0]
```

```
array([ 0. , -2. ,  0. ,  1.5,  0. ,  0. ])
```

```python
# type
arr.dtype
```

```
dtype('float64')
```
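Unlike the list of lists, a NumPy array has a single `dtype` and a fixed rectangular `shape`. For example, the integer `2` in the last row of `lol` is silently converted to a float. Here is a short check:

```python
import numpy as np

lol = [[1., 3., -1., 0.5, 2., 0.],
       [4., 0., 2.5, -4., 2., 1.],
       [1., 1., -1., 2, 2., 0.]]
arr = np.array(lol)

print(type(lol[2][3]))  # <class 'int'>: the list keeps whatever it was given
print(arr.dtype)        # float64: one type for the whole array
print(arr.shape)        # (3, 6): rows x columns
print(arr[2, 3])        # 2.0
```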

Good parts

- Fast (the heavy lifting runs in compiled code; don't ever believe "but C++")
- A lot of numerical features (and many more in SciPy)
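This minimal sketch shows a few of those features in action on the same `arr`: transposition, matrix multiplication and reductions along an axis. SciPy builds on the same array type.

```python
import numpy as np

arr = np.array([[1., 3., -1., 0.5, 2., 0.],
                [4., 0., 2.5, -4., 2., 1.],
                [1., 1., -1., 2., 2., 0.]])

print(arr.T.shape)  # (6, 3)

# dot products of every pair of rows: (3, 6) @ (6, 3) -> (3, 3)
gram = arr @ arr.T
print(gram)

# mean of each column (axis=0 runs down the rows)
print(arr.mean(axis=0))
```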

But

- What the hell do the columns mean?!

Pandas

(Note to self: resist any pandemic puns!)

```python
import pandas as pd

df = pd.DataFrame(arr,
                  index=['Sasha', 'Alex', 'Kim'],
                  columns=['sweet', 'sour', 'salty', 'bitter', 'spicy', 'sugar'])
df
```

|       | sweet | sour | salty | bitter | spicy | sugar |
|---|---|---|---|---|---|---|
| Sasha | 1.0 | 3.0 | -1.0 | 0.5 | 2.0 | 0.0 |
| Alex | 4.0 | 0.0 | 2.5 | -4.0 | 2.0 | 1.0 |
| Kim | 1.0 | 1.0 | -1.0 | 2.0 | 2.0 | 0.0 |

```python
df.loc['Alex'].mean()
```

```
0.9166666666666666
```

```python
df.loc['Alex', 'sweet']
```

```
4.0
```

```python
df.loc[:, 'sweet']
```

```
Sasha    1.0
Alex     4.0
Kim      1.0
Name: sweet, dtype: float64
```

```python
df.sweet
```

```
Sasha    1.0
Alex     4.0
Kim      1.0
Name: sweet, dtype: float64
```
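Names are not only for display: pandas also uses them to select and to align data. This short sketch uses the same `df`. The two `Series` at the end are a made-up example showing that arithmetic matches entries by label, not by position.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame(
    np.array([[1., 3., -1., 0.5, 2., 0.],
              [4., 0., 2.5, -4., 2., 1.],
              [1., 1., -1., 2., 2., 0.]]),
    index=['Sasha', 'Alex', 'Kim'],
    columns=['sweet', 'sour', 'salty', 'bitter', 'spicy', 'sugar'])

# select columns by name, in any order
print(df[['spicy', 'sweet']])

# per-column means keep the column names as the index
print(df.mean())

# for each person, the column with the highest value (first one on ties)
print(df.idxmax(axis=1))

# made-up example: labels, not positions, decide which entries are added
s1 = pd.Series({'Sasha': 1.0, 'Alex': 2.0})
s2 = pd.Series({'Alex': 10.0, 'Sasha': 20.0})
total = s1 + s2
print(total)
```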

Do we need anything more?

Hint

You think you own whatever data you loaded

The Matrix is just a dead thing you can claim

But I know every frame and row and column

Has a type, has an API, has a name

Answer

Colors (of the wind)!

Seaborn

```python
import seaborn as sns

sns.heatmap(df, cmap='coolwarm');
```

```python
sns.heatmap(df, cmap='coolwarm', annot=True);
```

```python
sns.clustermap(df, cmap='coolwarm', annot=True);
```
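The heatmap takes its axis labels straight from the DataFrame's index and columns, which is where naming pays off. This minimal sketch checks that without a display. The off-screen `Agg` backend and `center=0` are additions for this sketch, not from the slides. `center=0` pins the neutral middle of the diverging `coolwarm` palette to zero.

```python
import matplotlib
matplotlib.use("Agg")  # render off-screen, no window needed
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
import seaborn as sns

df = pd.DataFrame(
    np.array([[1., 3., -1., 0.5, 2., 0.],
              [4., 0., 2.5, -4., 2., 1.],
              [1., 1., -1., 2., 2., 0.]]),
    index=['Sasha', 'Alex', 'Kim'],
    columns=['sweet', 'sour', 'salty', 'bitter', 'spicy', 'sugar'])

ax = sns.heatmap(df, cmap='coolwarm', center=0, annot=True,
                 xticklabels=True, yticklabels=True)

# tick labels come from the DataFrame; no manual labelling required
x_labels = [t.get_text() for t in ax.get_xticklabels()]
y_labels = [t.get_text() for t in ax.get_yticklabels()]
print(x_labels)
print(y_labels)
plt.close(ax.figure)
```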

Or even interactively

Using clustergrammer: interactive, hierarchically clustered heatmaps, mostly for biology.

```python
from clustergrammer2 import Network, CGM2

net = Network(CGM2)
net.load_df(df)
```

```
>> clustergrammer2 backend version 0.15.0
```

```python
net.widget()
```

So, we are done?

(from Deep Learning with PyTorch)

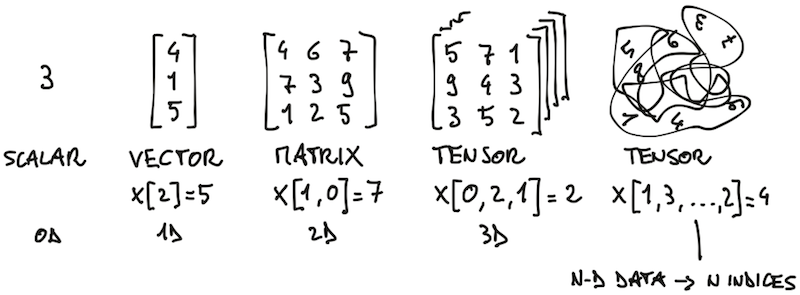

OK, but do we actually need it?

(except for some super-advanced physics)

Yes, for colors! RGB(A) channels.

- width, height, channel

In deep learning, we process a few images at the same time:

- sample, height, width, channel

- sample, channel, height, width

- sample, channel, width, height

- ???

So, let's memorize that!

Sure, we can learn the order of dimensions. Practice makes perfect!

Then look at this:

- OpenCV: HWC/HW
- Pillow: HWC/HW
- Matplotlib: HWC/HW
- Theano: NCHW
- TensorFlow: NHWC
- PyTorch: NCHW
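Converting between these conventions is a matter of permuting axes, and the permutation is easy to get wrong. This sketch assumes a random array stands in for an image loaded as HWC (for example, by Pillow or Matplotlib), with a PyTorch-style NCHW batch as the target:

```python
import numpy as np

height, width = 4, 6
img_hwc = np.random.rand(height, width, 3)  # stand-in for an RGB image, HWC

# HWC -> CHW: channels first, then height, then width
img_chw = np.transpose(img_hwc, (2, 0, 1))
# add a batch dimension in front: CHW -> NCHW
batch_nchw = img_chw[np.newaxis]
# NCHW -> NHWC (TensorFlow's default)
batch_nhwc = np.transpose(batch_nchw, (0, 2, 3, 1))

print(batch_nchw.shape)  # (1, 3, 4, 6)
print(batch_nhwc.shape)  # (1, 4, 6, 3)

# a wrong permutation still "works" but silently swaps height and width
oops = np.transpose(img_hwc, (2, 1, 0))
print(oops.shape)        # (3, 6, 4)
```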

Is it just me, or does the Theano tensor dimension order sound like some secret convent?

Even within PyTorch, dimension ordering for 1D networks is inconsistent: NCL vs NLC vs LNC.
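For instance, `nn.Conv1d` expects NCL, while `nn.LSTM` expects LNC by default and NLC with `batch_first=True`. This sketch feeds the same batch to all three; the sizes are arbitrary.

```python
import torch
from torch import nn

N, C, L = 8, 4, 20  # batch size, channels (features), sequence length (arbitrary)

conv = nn.Conv1d(in_channels=C, out_channels=6, kernel_size=3, padding=1)  # NCL
lstm = nn.LSTM(input_size=C, hidden_size=6)                                # LNC
lstm_bf = nn.LSTM(input_size=C, hidden_size=6, batch_first=True)           # NLC

x_ncl = torch.rand(N, C, L)

y_conv = conv(x_ncl)                            # (N, 6, L)
y_lstm, _ = lstm(x_ncl.permute(2, 0, 1))        # NCL -> LNC, output (L, N, 6)
y_lstm_bf, _ = lstm_bf(x_ncl.permute(0, 2, 1))  # NCL -> NLC, output (N, L, 6)

print(y_conv.shape, y_lstm.shape, y_lstm_bf.shape)
```

Passing `x_ncl` to `lstm` unchanged would make it read L=8 and N=4 with 20 features per step. It fails only because 20 does not match `input_size=4`; with unlucky sizes, a wrong order can go through silently.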

Tensors in PyTorch

Full disclaimer: I love PyTorch.

```python
import torch

x = torch.tensor([[
    [[1., 0., 1.],
     [0., 1., 0.],
     [1., 0., 1.]],

    [[0., 1., 0.],
     [1., 1., 1.],
     [0., 1., 0.]],

    [[1., 1., 1.],
     [1., 0., 1.],
     [1., 1., 1.]],
]])
x
```

```
tensor([[[[1., 0., 1.],
          [0., 1., 0.],
          [1., 0., 1.]],

         [[0., 1., 0.],
          [1., 1., 1.],
          [0., 1., 0.]],

         [[1., 1., 1.],
          [1., 0., 1.],
          [1., 1., 1.]]]])
```

```python
x.dtype
```

```
torch.float32
```

```python
x.size()
```

```
torch.Size([1, 3, 3, 3])
```

```python
x.mean(1)
```

```
tensor([[[0.6667, 0.6667, 0.6667],
         [0.6667, 0.6667, 0.6667],
         [0.6667, 0.6667, 0.6667]]])
```

Named tensors

Starting from PyTorch 1.3.0, tensors support dimension names.

However, as of PyTorch 1.6.0, this is still an experimental feature.

```python
x = x.rename('N', 'C', 'H', 'W')
x
```

```
UserWarning: Named tensors and all their associated APIs are an experimental feature and subject to change. Please do not use them for anything important until they are released as stable.
```

```
tensor([[[[1., 0., 1.],
          [0., 1., 0.],
          [1., 0., 1.]],

         [[0., 1., 0.],
          [1., 1., 1.],
          [0., 1., 0.]],

         [[1., 1., 1.],
          [1., 0., 1.],
          [1., 1., 1.]]]], names=('N', 'C', 'H', 'W'))
```

```python
x.mean('C')
```

```
tensor([[[0.6667, 0.6667, 0.6667],
         [0.6667, 0.6667, 0.6667],
         [0.6667, 0.6667, 0.6667]]], names=('N', 'H', 'W'))
```

```python
x.mean(('H', 'W'))
```

```
tensor([[0.5556, 0.5556, 0.8889]], names=('N', 'C'))
```

```python
x.transpose('H', 'W')
```

```
tensor([[[[1., 0., 1.],
          [0., 1., 0.],
          [1., 0., 1.]],

         [[0., 1., 0.],
          [1., 1., 1.],
          [0., 1., 0.]],

         [[1., 1., 1.],
          [1., 0., 1.],
          [1., 1., 1.]]]], names=('N', 'C', 'W', 'H'))
```

```python
x.flatten(['C', 'H', 'W'], 'features')
```

```
tensor([[1., 0., 1., 0., 1., 0., 1., 0., 1., 0., 1., 0., 1., 1., 1., 0., 1., 0.,
         1., 1., 1., 1., 0., 1., 1., 1., 1.]], names=('N', 'features'))
```

```python
m1 = torch.tensor([[1., 2.], [3., 4.]], names=('H', 'W'))
m2 = torch.tensor([[0, 1.], [1., 0.]], names=('W', 'H'))
m1 + m2
```

```
RuntimeError: Error when attempting to broadcast dims ['H', 'W'] and dims ['W', 'H']: dim 'W' and dim 'H' are at the same position from the right but do not match.
```
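One way to fix this is to make the order explicit. `Tensor.align_to` permutes dimensions by name. It is part of the same experimental named-tensor API, so expect the same warning.

```python
import torch

m1 = torch.tensor([[1., 2.], [3., 4.]]).rename('H', 'W')
m2 = torch.tensor([[0., 1.], [1., 0.]]).rename('W', 'H')

# reorder m2's dimensions to ('H', 'W') by name before adding
total = m1 + m2.align_to('H', 'W')
print(total)
print(total.names)  # ('H', 'W')
```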

Awesome! Let's use the names in neural networks!

```python
from torch import nn

x_input = torch.rand((4, 4), names=('N', 'C'))
x_input
```

```
tensor([[0.1011, 0.0956, 0.6034, 0.8772],
        [0.4385, 0.1957, 0.4768, 0.3740],
        [0.5953, 0.7032, 0.9211, 0.8834],
        [0.6115, 0.4057, 0.1443, 0.0208]], names=('N', 'C'))
```

```python
# a dense (fully-connected) layer
fc = nn.Linear(in_features=4, out_features=2)
fc(x_input)
```

```
tensor([[-0.1660, -0.4661],
        [-0.1843, -0.0722],
        [-0.1989, -0.7008],
        [-0.3339,  0.2273]], grad_fn=<AddmmBackward>, names=('N', None))
```

It only sort of works: the output loses the name of the feature dimension (`names=('N', None)`). And it gets worse.

```python
x_input_2d = torch.rand((4, 3, 4, 4), names=('N', 'C', 'H', 'W'))
conv = nn.Conv2d(in_channels=3, out_channels=2, kernel_size=3)
conv(x_input_2d)
```

```
RuntimeError: aten::mkldnn_convolution is not yet supported with named tensors. Please drop names via `tensor = tensor.rename(None)`, call the op with an unnamed tensor, and set names on the result of the operation.
```
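The error message itself suggests a workaround: drop the names, run the layer, then put the names back on the result. This minimal sketch uses a hypothetical helper, `call_with_names`, which is not part of PyTorch. It assumes the layer keeps the number and meaning of dimensions, as `Conv2d` does for NCHW.

```python
import torch
from torch import nn

def call_with_names(module, x, names):
    """Hypothetical helper: check names, run `module` on unnamed data, restore names."""
    if x.names != tuple(names):
        raise ValueError(f"expected dims {list(names)}, got {list(x.names)}")
    y = module(x.rename(None))  # drop names, as the error message suggests
    return y.rename(*names)     # set names on the result

conv = nn.Conv2d(in_channels=3, out_channels=2, kernel_size=3)
x_input_2d = torch.rand((4, 3, 4, 4), names=('N', 'C', 'H', 'W'))

y = call_with_names(conv, x_input_2d, ('N', 'C', 'H', 'W'))
print(y.names, tuple(y.shape))  # ('N', 'C', 'H', 'W') (4, 2, 2, 2)
```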

Named neural networks

A very experimental package: github.com/stared/pytorch-named-dims by Bartłomiej Olechno and me, from ECC Games.

Inspired by:

- Quantum Tensors JS by Piotr Migdał

- Tensor Considered Harmful by Alexander Rush

Not yet on PyPI, but you can install it directly from the repo:

```
pip install git+https://github.com/stared/pytorch-named-dims.git
```

```python
import torch
from torch import nn
from pytorch_named_dims import nm

convs = nn.Sequential(
    nm.Conv2d(3, 5, kernel_size=3, padding=1),
    nn.ReLU(),  # preserves dims on its own
    nm.MaxPool2d(2, 2),
    nm.Conv2d(5, 2, kernel_size=3, padding=1),
)

x_input = torch.rand((2, 3, 2, 2), names=('N', 'C', 'H', 'W'))
convs(x_input)
```

```
tensor([[[[-0.0050]],

         [[ 0.0938]]],


        [[[-0.0194]],

         [[ 0.1004]]]], grad_fn=<AliasBackward>, names=('N', 'C', 'H', 'W'))
```

```python
x_input = torch.rand((2, 3, 2, 2), names=('N', 'C', 'W', 'H'))
convs(x_input)
```

```
ValueError: Layer NamedConv2d requires dimensions ['N', 'C', 'H', 'W'] but got ['N', 'C', 'W', 'H'] instead.
```

Still, it is as permissive and accepting as Python! 🐍❤️

```python
x_input = torch.rand((2, 3, 2, 2), names=('N', 'C', None, None))
convs(x_input)
```

```
tensor([[[[-0.0262]],

         [[ 0.0994]]],


        [[[ 0.0190]],

         [[ 0.0969]]]], grad_fn=<AliasBackward>, names=('N', 'C', 'H', 'W'))
```
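How might such a layer decide what to accept? This sketch shows one possible rule that mimics the behavior above. The expected names must match position by position, and `None` (an unnamed dimension) matches anything. `NamedWrapper` is hypothetical; it is not the pytorch-named-dims implementation or API.

```python
import torch
from torch import nn

class NamedWrapper(nn.Module):
    """Hypothetical sketch: checks names, treating None as a wildcard."""

    def __init__(self, module, names=('N', 'C', 'H', 'W')):
        super().__init__()
        self.module = module
        self.names = tuple(names)

    def forward(self, x):
        got = x.names
        compatible = len(got) == len(self.names) and all(
            g is None or g == e for g, e in zip(got, self.names))
        if not compatible:
            raise ValueError(f"{type(self.module).__name__} requires dimensions "
                             f"{list(self.names)} but got {list(got)} instead.")
        y = self.module(x.rename(None))  # run on unnamed data
        return y.rename(*self.names)     # output gets the full expected names

layer = NamedWrapper(nn.Conv2d(3, 5, kernel_size=3, padding=1))

y = layer(torch.rand((2, 3, 4, 4), names=('N', 'C', None, None)))
print(y.names)  # ('N', 'C', 'H', 'W')

try:
    layer(torch.rand((2, 3, 4, 4), names=('N', 'C', 'W', 'H')))
    rejected = False
except ValueError as err:
    rejected = True
    print(err)
```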

Quantum mechanics

Deep learning (so-called "AI") is difficult?

Let's try quantum mechanics!

It is not thaaat bad - see Quantum mechanics for high-school students and play the Quantum Game with Photons.

In quantum mechanics:

- we use complex numbers,
- a state has as many dimensions (tensor indices) as there are particles,
- an operation has twice as many.
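To make the counting concrete, consider two qubits. Their state can be stored as a complex tensor with two indices (one per particle, each of size 2). A two-particle operation becomes a tensor with four indices: an output and an input index per particle. This NumPy sketch applies a SWAP operation to the singlet state with `np.einsum`. Building SWAP by hand is an illustration chosen here, not taken from the slides.

```python
import numpy as np

# singlet state (|01> - |10>)/sqrt(2) as a 2x2 tensor: psi[first, second]
psi = np.zeros((2, 2), dtype=complex)
psi[0, 1] = 1 / np.sqrt(2)
psi[1, 0] = -1 / np.sqrt(2)

# SWAP as a 4-index tensor: swap[out1, out2, in1, in2]
swap = np.zeros((2, 2, 2, 2), dtype=complex)
for a in range(2):
    for b in range(2):
        swap[b, a, a, b] = 1.0  # |a b> -> |b a>

# contract the operator's input indices with the state's indices
out = np.einsum('ijkl,kl->ij', swap, psi)

print(psi.ndim, swap.ndim)     # 2 4
print(np.allclose(out, -psi))  # True: swapping the singlet flips its sign
```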

```python
# BTW: complex numbers are built into plain Python
(1 - 2j) * (3 + 1j)
```

```
(5-5j)
```

QuTiP

QuTiP: Quantum Toolbox in Python, perhaps the easiest way to approach quantum mechanics with Python.

A few examples of Qubism visualizations to show quantum states, based on Qubism: self-similar visualization of many-body wavefunctions, New J. Phys. 14 053028 (2012).

```python
from qutip import ket, plot_schmidt

singlet = (ket('01') - ket('10')).unit()
plot_schmidt(singlet, figsize=(3, 3));
```

```python
separable = (ket('01') - ket('00')).unit()
plot_schmidt(separable, figsize=(3, 3));
```

```python
ghz4 = (ket('0000') + ket('1111')).unit()
plot_schmidt(ghz4, figsize=(4, 4));
```

```python
state = (ket('0101') + ket('1011')).unit()
plot_schmidt(state, figsize=(4, 4));
```
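Schmidt plots rest on a matrix trick: reshape the vector of amplitudes into a matrix, splitting the particles into two groups. The singular values of that matrix are the Schmidt coefficients, and more than one nonzero value means the two groups are entangled. This NumPy sketch is an illustration, not QuTiP's code. Amplitudes are ordered |00>, |01>, |10>, |11>, as for `ket('01')` and friends.

```python
import numpy as np

def schmidt_coefficients(state, dims):
    """Singular values of the amplitude vector reshaped into a dims[0] x dims[1] matrix."""
    return np.linalg.svd(np.reshape(state, dims), compute_uv=False)

def entanglement_entropy(state, dims):
    """Von Neumann entropy (natural log) of either group, from the Schmidt coefficients."""
    p = schmidt_coefficients(state, dims) ** 2
    p = p[p > 1e-12]  # drop zeros; 0 * log(0) counts as 0
    return float(-np.sum(p * np.log(p)))

singlet = np.array([0, 1, -1, 0]) / np.sqrt(2)    # (|01> - |10>)/sqrt(2)
separable = np.array([-1, 1, 0, 0]) / np.sqrt(2)  # (|01> - |00>)/sqrt(2)
ghz4 = np.zeros(16)
ghz4[[0, 15]] = 1 / np.sqrt(2)                    # (|0000> + |1111>)/sqrt(2)

print(schmidt_coefficients(singlet, (2, 2)))    # two equal values: entangled
print(schmidt_coefficients(separable, (2, 2)))  # one nonzero value: product state
print(entanglement_entropy(ghz4, (4, 4)))       # ln 2, qubits 1-2 vs 3-4
```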

Bra Ket Vue ⟨𝜑|𝜓⟩.vue

Bra Ket Vue: a Vue-based visualization of quantum states and operations.

A very new one: we released it with Klem Jankiewicz this May!

This slide is intentionally empty.

I tried to run JavaScript code in Jupyter, but I failed (again).

Instead, I will use JSFiddle.

%%html

<script async src="//jsfiddle.net/stared/57b231yu/embed/"></script>

%%html

<script async src="//jsfiddle.net/stared/kc0de19n/embed/"></script>

%%html

<script async src="//jsfiddle.net/stared/0t3beofr/embed/"></script>

%%html

<script async src="//jsfiddle.net/stared/Lx7fn2r1/embed/"></script>

%%html

<script async src="//jsfiddle.net/stared/ryux9pcw/embed/"></script>

Thanks! Спасибо!

p.migdal.pl, @pmigdal, github.com/stared

- training neural networks? Use livelossplot for interactive charts (over 170k downloads!)

- tensors in JavaScript and their visualization: github.com/Quantum-Game

- from Zen of Python and Jupyter Notebook to TypeScript and tests: How I Learned to Stop Worrying and Love the Types & Tests

- Bra Ket Vue in Distill RMarkdown (RMarkdown is like Jupyter but better)

- interactive slides created with Jupyter Notebook & RISE